We live in the cognitive age—an era when we will begin replicating, and exceeding, the prowess of the human mind in specific domains of expertise. While the implications of artificial intelligence (AI) are broad, as we head deeper into this new era, we will find that AI combined with myriad exponential technologies will carry us inexorably toward a different form of warfare that will unfold at speeds we cannot fully anticipate—a form of warfare we call hyperwar. Historically, the balance of power between belligerents has been dictated in great measure by the relative size of their armies. Knowledge of terrain, skill, and technology all have been multipliers for smaller forces, but quantity has had a quality all its own. If one sets aside consideration of nuclear weapons, which allow small states such as Israel and North Korea to hold their opponents at bay, the outcomes of conventional conflicts are determined primarily by a country’s ability to field a larger force, sustained over a longer period of time—the costs of which are enormous.

The arrival of artificial intelligence on the battlefield promises to change this. Autonomous systems soon will be able to perform many of the functions historically done by soldiers, whether for intelligence analysis, decision support, or the delivery of lethal effects. In fact, if developers of these technologies are to be believed, their systems may even outperform their human competition. As a consequence, the age-old calculation that measures a country’s basic military potential by estimating the number of able-bodied individuals capable of serving no longer may be reliable in determining the potency with which a country can project power.

With the emergence of AI and autonomy, the United States must adjust how it views the balance of power in the coming decades, as well as its understanding of its geopolitical future.

Adapting quadcopters to carry explosives is just one way insurgents have weaponized inexpensive commercial technology. The addition of artificial intelligence will make them ever more potent.

Six Key Implications

The large-scale use of autonomous military systems will have significant, broad effects. Specifically:

• Small, rich countries with great power ambition will shape geopolitics significantly.

• Asymmetric, insurgent use of autonomous technologies will disrupt and potentially destabilize countries incapable of mounting sophisticated, preemptive counters.

• Regional powers afforded protection by their own nuclear umbrellas will have greater freedom of action against rivals without crossing nuclear thresholds.

• Peer competitors with large, aging conventional forces will be able to amplify their conventional potential by rapidly and cheaply modernizing these assets. In many cases it will be possible to leapfrog newer technology by integrating autonomy into older platforms.

• The assurance and expectation of privacy may collapse in many parts of the world. AI will make ever larger data-gathering efforts viable, practical, and beneficial to intelligence services. Because these activities will take place even when there are no formal hostilities, the question of privacy will become paramount. And where automatically interpreted data begins to be acted on without human intervention, new questions will be raised.

• Technological advances to amplify the precision of autonomous platforms and an increased level of risk tolerance to their loss should lead to lower noncombatant casualties, but this also may make conventional conflicts easier to sell in political terms.

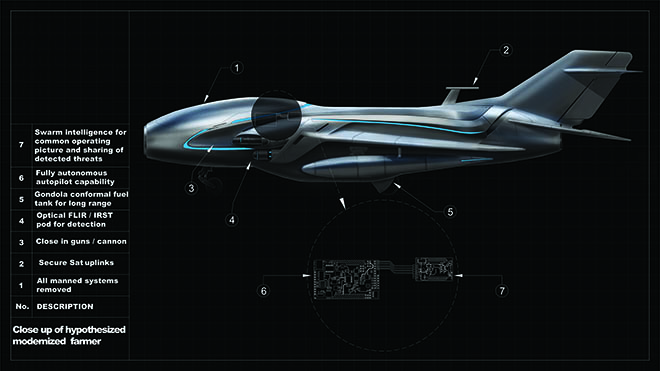

This hypothesized drone would include swarm intelligence for a common operating picture and sharing of detected threats; a fully autonomous autopilot capability; a gondola conformal fuel tank for long range; optical forward-looking infrared/infrared search-and-track pod for detection; close-in guns/cannon; and secure satellite uplinks.

Small, rich, and powerful

Recent conflicts in the Middle East have shown that countries such as the UAE and Qatar, while small in absolute terms, can have an outsized effect on a region. This is partially because of their employment of technology and the considerable resources they are able to expend on such initiatives.

The coalition opposed to Qatar, for example, accused Qatar of sowing discord and undermining the region by leveraging information assets such as the Al-Jazeera network. The UAE, on the other hand, is seen to project its military power into distant theaters, such as Libya, through the leverage of contracted forces and a small number of technologically advanced assets. These examples show that when resources, technological capability, and political will come together, a country with a small population can become a significant influencer of regional affairs.

In the age of hyperwar, where the speed of conflict is enormously accelerated, and with autonomous weapons making human force size less critical, this impact will be multiplied many times over.

Imagine long-range autonomous combat aircraft penetrating enemy airspace and punishing rivals, or minimally manned helicopter-carrier-sized expeditionary ships equipped with autonomous aircraft ready to project power at distant points on the globe. These barely manned fleets, networked through AI-controlled sensor platforms and autonomous decision support, would be protected by autonomous undersea assets and corvette-sized craft that fend off aerial, subsurface, and surface threats. AI-powered robotic “dark factories” could produce these mechanically simple systems controlled with incredibly sophisticated software.

In the wars of the future, the ability of rich countries to attract key talent, acquire intellectual property developed elsewhere, and invest in capital goods to create these multidomain autonomous force capabilities will be a huge factor—not population.

Insurgent use of autonomy

In the Yemeni, Syrian, and Afghan conflicts, small, inexpensive commercial drones have been used as flying improvised explosive devices, propaganda tools, and intelligence, surveillance, and reconnaissance assets. In Iraq, ISIS terrorists demonstrated their ability to quickly assemble and deploy remote-controlled quadcopters as “grenade bombers.” 1 While these drones were not artificially intelligent themselves or part of an artificially intelligent network, such systems likely will be in the future, as AI capabilities such as image recognition and autonomous flight control become more common.

The availability of such systems only will increase. Reports indicate that improvised weapons, including unmanned aerial vehicles, are available for sale on a number of underground online weapons markets. Using additive manufacturing, small groups of individuals might be able to “print” and assemble robotic aviation capabilities and, eventually, perhaps a mechanized ground task force to carry out a specific mission.

This phenomenon of beginning with off-the-shelf, dual-use, consumer-grade items such as 3D printers, inexpensive microcontrollers, and cell phones and ending with military-grade special operations and relatively long-range air and surface capabilities will be magnified over time. As the commercial technology they weaponize becomes less expensive and open initiatives to develop autonomous flight-control software become more well known and accessible to anyone with an internet connection, insurgents will have a greater opportunity to acquire and experiment with such systems in a growing number of scenarios and to improve tactics.

So how can such actions be characterized? The recent attack by unknown assailants against the Russian Hmeymim airbase in Syria is one example. Thirteen drones carrying what have been variously characterized as bombs or mortar rounds attempted to attack the airbase and the neighboring Russian naval base at Tartus. Though the attack was coordinated, it is unlikely the drones were being controlled by a single, AI-based swarming algorithm. The Russians were able to defeat the attack using a combination of ZSU-23 antiaircraft defenses and electronic warfare systems. But what would have happened were a larger attack coordinated with more sophisticated AI-based control?

Conventional antiaircraft capabilities and radar infrastructure are not designed to deal with a large number of small craft flying at low altitudes. Law enforcement and military forces will need new ways to counter these coming trends, and a new view of defending public spaces in the domestic context will need to be considered.

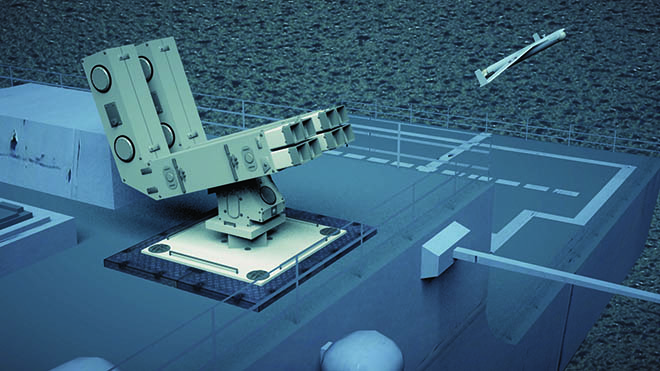

Modernizing U.S. defense acquisition policy to take AI and autonomous systems into account—here, a notional shipboard drone launcher—is vital.

Regional powers and nuclear umbrellas

The lesson North Korea learned from the fall of Saddam Hussein in Iraq and the demise of other dictators before and after him is that nuclear weapons are the ultimate insurance. If you have nuclear weapons, you can short-circuit the grand alliances the West puts together to punish a noncompliant regime. The North Koreans have, thus far, been proven right in this hypothesis. Despite repeated and threatening missile testing, verbal attacks against the United States, and charges of terrorism and/or homicide, no kinetic action has been taken against them.

Notwithstanding President Donald Trump’s newfound interest in diplomacy with Kim Jong Un, many analysts are of the opinion that the world will have to live with a nuclear North Korea. With an intercontinental ballistic missile (ICBM) capability that puts the entire United States in range and a nuclear arsenal sufficient to ensure that antiballistic-missile shields no longer can guarantee protection, North Korea cannot be treated lightly.

Given the situation in the Middle East, it is likely Iran would move quickly to acquire nuclear capability if the 2015 nuclear deal collapses. U.S. intelligence assessments regarding how long it would take North Korea to acquire nuclear and ICBM capability were quite inaccurate; the Kim regime acquired both capabilities sooner than expected. Could this occur in the case of Iran, too? And if so, what of Saudi Arabia, Turkey, and perhaps even the UAE? King Abdullah has said Saudi Arabia will not be number two in a regional nuclear arms race behind the Iranians.

It would be irresponsible to expect no further nuclear proliferation to occur. The North Korean scenario multiplied fivefold, in different parts of the world, is something to consider. Would all these countries use their nuclear umbrellas to increase their freedom of action? Probably.

AI to revitalize conventional forces

For years, the People’s Liberation Army Air Force (PLAAF) has been converting 1950s- and 1960s-era jets such as the J-6 (Chinese version of the MiG-19) and J-7 (Chinese version of the MiG-21) into autonomous drones. It is not clear how the Chinese intend to employ these systems—hypotheses range from using them as a “first wave” to saturate opposing air defenses to more fanciful hypotheses involving air-to-air combat. Regardless of where the capabilities of these systems fall, what is clear is that a reimagining of otherwise obsolete equipment, married with upgraded sensors, autonomy, and even AI-based control algorithms, promises to open new avenues for near-peer states to rapidly field significant capability.

Adding sensors and control to “dumb” systems is not a new strategy for the U.S. Air Force, which added “kits” such as the Boeing Joint Direct Attack Munition (JDAM) kit to unguided bombs, transforming them into smart, precise, GPS-guided munitions. These were used to great effect during the most recent Gulf War. Today, many manufacturers, such as Turkey’s Turkish Aerospace Industries, develop such “bolt-on” kits to upgrade the thousands of unguided bombs in global armories.

Research is demonstrating the value of machine learning in synthesizing data from multiple sensors to increase the detection accuracy and range of conventional systems such as sonar. Specialized machine-learning algorithms and the weaponization of data can come together to enable quick, inexpensive upgrades that significantly enhance the capability of legacy systems. And because software can be modified, adapted, and developed quickly, we are entering an era where conventional systems can be evolved without long engineering cycles. The speed with which a motivated adversary can imbue new capabilities and revitalize existing weapon systems will put pressure on the defense acquisition practices and policies that were largely crafted for an era that is now history. While innovation always will be valuable, at this point in history, integration of existing capabilities carries ominous potential.

The need to modernize and change defense acquisition policy as a consequence of the AI revolution is not a major thrust of most mainstream defense thought pieces today, but perhaps it should be.

Is ensuring privacy impossible?

Earlier this year, China launched a new big-data population monitoring system designed to evaluate citizens’ loyalty to the state. This system of “social credits” reportedly will take into account every Chinese citizen’s online activity, shopping patterns, participation in protests, commercial and legal records, and much more. Citizens found to have a low social credit score will face automatically applied penalties. For example, they may be denied a loan application, or their ability to board a domestic or international flight may be curtailed. For abysmally low scores, what might the consequences be? Perhaps incarceration?

Given the gargantuan amount of data that must be analyzed to implement such a holistic monitoring system for 1.4 billion people, it should be no surprise that much of the monitoring, collection, interpretation, and scoring will be automated and handled by AI algorithms. It also should not be revelatory that for routine, high-volume actions that are likely to be taken by such a system—for example, denying a loan application above a certain amount—automation will be the only practical recourse.

This Chinese social credit system is a large-scale example of AI automating the decision-action loop in a nonkinetic domain. Many countries around the world are interested in technology of this nature. Given China’s willingness to export dual-use products, it is likely many other governments soon will be able to access similar systems.

What becomes of privacy in this new era? What of the expectation of anonymity in certain contexts? Against the backdrop of the Cambridge Analytica, Facebook, and other privacy debates, one may find some hope in that society appears to be pushing back against creeping surveillance. However, the realist may wonder whether most people simply will become inured to their loss of privacy, while the de facto trend leads to an era where, at least in some parts of the world, nearly every expression and action is monitored and automatically analyzed.

While it can be argued that this is a natural progression in terms of authoritarian states using technology to amplify their hold on the population, beyond a certain point automation is capable of altering fundamental social dynamics. For example, there is a difference between an authoritarian state that can tap a few thousand telephone lines and monitor a few thousand individuals and one that can monitor everyone, all the time. In the former, there is space for dissent and alternate opinions to take root; there is the potential for change and a chance for some privacy and anonymity. In other words, there is some hope for a dissenter. As this space shrinks, with always-on AI surveillance, will there be any ability to muster dissent? Any space for contrary opinions?

Perhaps most worryingly, will gamified systems such as a social score reprogram the citizenry en masse to become more compliant than ever before?

Containing human loss, enabling war

That autonomous weapon systems mean humans no longer are in harm’s way is a good thing. But the worry surrounding this more “antiseptic” form of warfare is that war may become more likely as losses (of machines, not humans) become more “thinkable,” even acceptable. Regardless, autonomy is coming to weapon systems. The advantages in battle enabled by a tightening of the observe, orient, decide, act (OODA) loop are so significant that they cannot be overlooked or ignored. 2

The nature of hyperwar is not defined by autonomous weapons alone. Hyperwar as a concept and a reality emerges from the speed of future war, which comes in almost equal parts from near-real-time or real-time AI-powered intelligence analysis and AI-powered decision support in command and control, right up to and potentially including where a human figures in the decision making. The string of detect, decide, and act, even when acting is a combination of human-controlled or semiautonomous systems, will be accelerated immeasurably by AI.

When one considers the changing world order—the strong drift toward multipolarity and new power centers acting out on the world stage—together with the rapid advances in autonomous systems, the clear implication is that certain kinds of campaigns and missions may become “easier” for political and military leaders to justify. Will the consequences of these missions and campaigns always remain contained? Will the increased freedom of action that comes when losses become more acceptable be abused to the point where we are likely to face greater conflict and escalatory reprisals? This remains to be seen. But it is, nonetheless, an outcome for which the United States must begin to prepare.

Next Steps

To plan for the world ahead, a holistic assessment that fuses consideration of technological and geopolitical change is necessary. By analyzing the rate and direction of advancement in exponential technologies such as AI and considering this together with clear shifts developing in the world order, we can draw inferences to which our planners and strategists must begin to respond. The pace of change in the cognitive age in which we now live is considerably faster than what we experienced in the information age or the industrial age before it. Relative advantage can shift quickly as “software eats the world” and value shifts to the ephemeral and easily replicable.

The best way to adapt to change is to stay nimble and to ramp up investments in learning, research, and development. These investments can supply the knowledge and intellectual property resources that we can begin to direct to create the “10X” advantage of which Army Chief of Staff General Mark Milley has spoken. 3 Certainly, near-peer competitors, emerging regional powers, criminal groups and even super-empowered individuals will not sit on the sidelines for long. The capabilities and scenarios discussed here will materialize. The question is, will this be to the United States’ benefit or to its peril?

1. Kelsey D. Atherton, “ISIS Is Dropping Bombs with Drones in Iraq,” Popular Science, 16 January 2017.

2. As we explained in “On Hyperwar,” U.S. Naval Institute Proceedings 143, no. 7 (July 2017).

3. Matthew Cox, “Army Chief: Modernization Reform Means New Tanks, Aircraft, Weapons,” Military.com, 10 October 2017.

General Allen has served in a variety of command and staff positions in the Marine Corps and the joint force, including as Special Presidential Envoy for the Global Coalition to Counter the Islamic State of Iraq and Levant, Commander of the NATO International Security Assistance Force in Afghanistan, and Deputy Commander, U.S. Central Command. He serves on the board of directors of SparkCognition. He was the corecipient of the 2015 Eisenhower Award of the Business Executives for National Security.

Mr. Husain, recognized as Austin’s Top Technology Entrepreneur of the Year and one of Onalytica’s Top 100 global artificial intelligence influencers, is a serial entrepreneur and inventor with more than 50 filed patents. He is the founder and chief executive officer of SparkCognition, an award-winning machine-learning/AI-driven cognitive analytics company.
