by Crispin Rovere

Graham Allison alerts us to artificial intelligence being the epicenter of today’s superpower arms race.

Drawing heavily on Kai-Fu Lee’s basic thesis, Allison sets out the battlelines: the United States versus China, across the domains of human talent, big data, and government commitment.

Allison further points to the absence of controls, or even dialogue, on what AI means for strategic stability. With implied resignation, his article acknowledges the smashing of Pandora’s Box, noting many AI advancements occur in the private sector beyond government scrutiny or control.

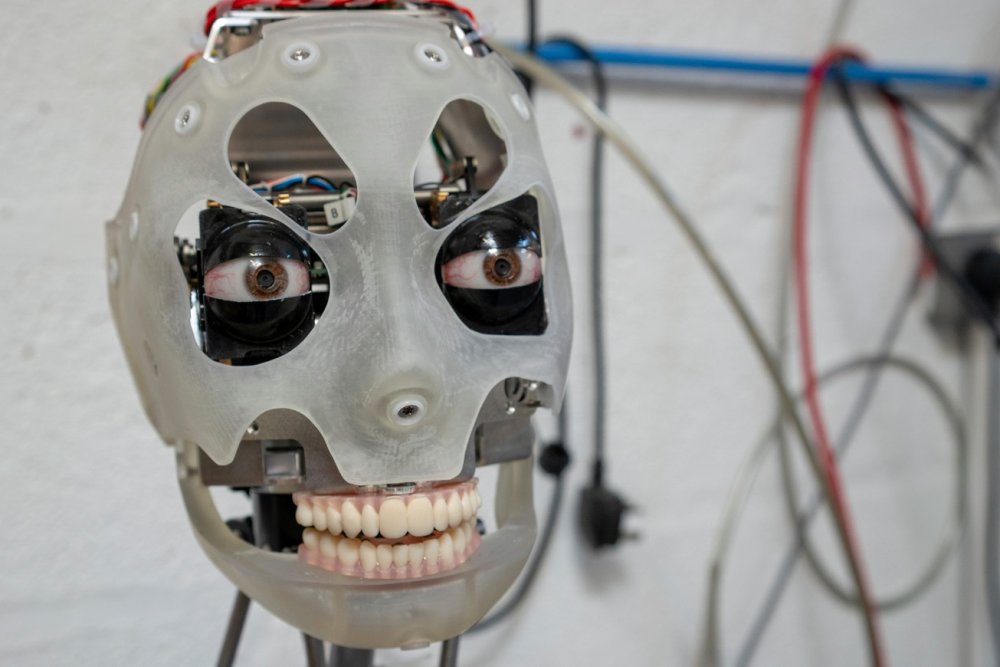

However, unlike the chilling and destructive promise of nuclear weapons, the threat posed by AI in popular imagination is amorphous, restricted to economic dislocation or sci-fi depictions of robotic apocalypse.

Absent from Allison’s call to action is an explanation of the “so what?”: why does the future hinge on AI dominance? After all, the few examples Allison does provide (mass surveillance, pilot HUDs, autonomous weapons) describe continued enhancements to the status quo, which is incremental change rather than a paradigm shift.

As Allison notes, President Xi Jinping awoke to the power of AI after AlphaGo defeated Lee Sedol, then one of the world’s top human Go players. But why? What did Xi see in this computation that persuaded him to make AI the centerpiece of Chinese national endeavor?

The answer: AI’s superhuman capacity to think.

To explain, let’s begin with what I am not talking about. I do not mean so-called “general AI,” the broad-spectrum intelligence with self-directed goals that acts independently of, or in spite of, the preferences of its human creators.

Eminent figures such as Elon Musk and Sam Harris warn of the coming of general AI and, in particular, of the so-called “singularity,” wherein AI evolves the ability to rewrite its own code. According to Musk and Harris, this will precipitate an exponential explosion in that AI’s capability, realizing 10,000 IQ and beyond in a matter of mere hours. At such time, they argue, AI will become to us what we are to ants, with similar levels of regard.

I concur with Sam and Elon that the advent of artificial general superintelligence is highly probable, but it still requires transformative technological breakthroughs whose timing is hard to predict. Accordingly, whether general AI is realized 30 or 200 years from now remains unknown, as does the nature of the intelligence created: whether it is conscious or instinctual, innocent or a weapon.

When I discuss the AI arms race, I mean the continued refinement of existing technology: artificial intelligence that, while a true intelligence in the sense of being able to self-learn, has a single programmed goal constrained within a narrow set of rules and parameters (such as a game).

To demonstrate what President Xi saw in AI winning a strategy game, and why the global balance of power hinges on it, we need to talk briefly about games.

Artificial Intelligence and Games

There are two types of strategy games: games of “complete information” and games of “incomplete information.” A game of complete information is one in which every player can see all of the parameters and options of every other player.

Tic-Tac-Toe is a game of complete information. An average adult can “solve” this game with less than thirty minutes of practice. That is, you can adopt a strategy under which, no matter what your opponent does, you can correctly counter it to obtain at least a draw. If your opponent deviates from that same strategy, you can exploit them and win.
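To make “solving” concrete, here is a minimal sketch that searches every Tic-Tac-Toe position by brute force (plain minimax). The names `LINES`, `winner`, and `value` are my own illustrative choices, and the board encoding (a nine-character string, X moving first) is an assumption made for brevity. It reports a draw under best play from the empty board, and a forced win for X once O replies poorly.

```python
from functools import lru_cache

# The eight winning lines, as indices into a 9-character board string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]
EMPTY = " " * 9


def winner(board):
    """Return 'X' or 'O' if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, player):
    """Game value from X's point of view with `player` to move:
    +1 means X wins, 0 a draw, -1 O wins, assuming best play by both."""
    won = winner(board)
    if won is not None:
        return 1 if won == "X" else -1
    if " " not in board:
        return 0
    nxt = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], nxt)
               for i, cell in enumerate(board) if cell == " "]
    # X picks the best outcome for X; O picks the worst outcome for X.
    return max(results) if player == "X" else min(results)


if __name__ == "__main__":
    print("Empty board, best play:", value(EMPTY, "X"))
    # X takes the center, O replies on an edge instead of a corner.
    cells = list(EMPTY)
    cells[4], cells[1] = "X", "O"
    print("After an edge reply:", value("".join(cells), "X"))
```

The full search is feasible here only because the game is tiny; the same approach does not scale to the larger games discussed later.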

Conversely, a basic game of uncertainty is Rock, Scissors, Paper. Upon learning the rules, all players immediately know the best response to each throw. If your opponent throws Rock, you want to throw Paper. If they throw Paper, you want to throw Scissors, and so on.

Unfortunately, you do not know ahead of time what your opponent is going to do. Being aware of this, what is the correct strategy?

The “unexploitable” strategy is to throw Rock, Scissors, and Paper one third of the time each (roughly 33 percent apiece), with each option chosen randomly to avoid observable patterns or bias.

This unexploitable strategy means that, no matter what approach your opponent adopts, they won't be able to gain an edge against you.

But let’s imagine your opponent throws Rock 100 percent of the time. How does your randomized strategy stack up? 33 percent of the time you'll tie (Rock), 33 percent of the time you'll win (Paper), and 33 percent of the time you'll lose (Scissors)—the total expected value of your strategy against theirs is 0.

Is this your “optimal” strategy? No. If your opponent is throwing Rock 100 percent of the time, you should be exploiting your opponent by throwing Paper.
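The arithmetic above can be checked with a short sketch. It assumes the usual scoring of +1 for a win, 0 for a tie, and -1 for a loss; the helper names (`payoff`, `expected_value`, `pure`, `best_response`) are illustrative, not taken from any library. Exact fractions are used so that one third really is one third.

```python
from fractions import Fraction

ACTIONS = ("Rock", "Paper", "Scissors")
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}


def payoff(mine, theirs):
    """+1 if my throw wins, 0 for a tie, -1 if it loses (assumed scoring)."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def expected_value(my_mix, their_mix):
    """Average payoff when both sides throw according to their probabilities."""
    return sum(my_mix[a] * their_mix[b] * payoff(a, b)
               for a in ACTIONS for b in ACTIONS)


def pure(action):
    """A strategy that throws `action` 100 percent of the time."""
    return {a: Fraction(int(a == action)) for a in ACTIONS}


def best_response(their_mix):
    """The single throw with the highest expected value against `their_mix`."""
    return max(ACTIONS, key=lambda a: expected_value(pure(a), their_mix))


UNIFORM = {a: Fraction(1, 3) for a in ACTIONS}

if __name__ == "__main__":
    always_rock = pure("Rock")
    print("Random thirds vs. always-Rock:", expected_value(UNIFORM, always_rock))
    print("Best response to always-Rock:", best_response(always_rock))
    print("Its expected value:", expected_value(pure("Paper"), always_rock))
```

The randomized strategy breaks even against the all-Rock opponent, while always throwing Paper wins every round. No single throw gains anything against the randomized strategy, which is what makes it unexploitable.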

Naturally, if your opponent is paying attention they, in turn, will adjust to start throwing Scissors. You and your opponent then go through a series of exploits and counter-exploits until you both gradually drift toward an unexploitable equilibrium.
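One simple way to picture this drift is a procedure known in game theory as fictitious play, which I am substituting here as an illustration; it is not a claim about how real players behave. In this sketch, each new throw is the best response to the whole history of throws so far, starting from a single Rock. The function names and the tie-breaking rule (earlier entries in `ACTIONS` win ties) are assumptions.

```python
ACTIONS = ("Rock", "Paper", "Scissors")
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}


def payoff(mine, theirs):
    """+1 if my throw wins, 0 for a tie, -1 if it loses (assumed scoring)."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def best_response(counts):
    """Throw that scores best against the observed history of throws.
    Ties go to whichever action appears first in ACTIONS."""
    return max(ACTIONS,
               key=lambda a: sum(n * payoff(a, b) for b, n in counts.items()))


def self_play(rounds, opening="Rock"):
    """Start from one `opening` throw, then repeatedly add the best response
    to everything thrown so far. Returns the long-run frequencies."""
    counts = {a: 0 for a in ACTIONS}
    counts[opening] = 1
    for _ in range(rounds):
        counts[best_response(counts)] += 1
    total = sum(counts.values())
    return {a: counts[a] / total for a in ACTIONS}


if __name__ == "__main__":
    for rounds in (10, 100, 10_000):
        freqs = self_play(rounds)
        print(rounds, {a: round(f, 3) for a, f in freqs.items()})
```

The history begins as pure Rock, gets exploited by Paper, which is in turn exploited by Scissors, and so on. The exploits never stop, but the overall mix of throws settles ever closer to one third each.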

With me so far? Good. Let's talk about computing and games.

As stated, nearly any human can solve Tic-Tac-Toe, and computers solved checkers many years ago. However, more complex games such as Chess, Go, and No-limit Texas Hold’em poker have not been solved.

Although all three are mind-bogglingly complex, chess is the simplest. In 1997, reigning world champion Garry Kasparov was beaten by the supercomputer Deep Blue in a six-game match. Today, anyone reading this has access to a chess computer on their phone that could trounce any human player.

Meanwhile, the eastern game of Go eluded programmers. Go has many orders of magnitude more combinations than chess. Until recently, humans beat computers by being far more efficient in selecting moves: we don't spend our time trying to calculate every possible option twenty-five moves deep. Instead, we intuitively narrow our decision-making to a few good choices and assess those.
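For a rough sense of scale, the sketch below compares deliberately crude upper bounds on the number of board arrangements. It assumes a 19-by-19 Go board where each point is empty, black, or white, and a chessboard where each of the 64 squares is empty or holds one of twelve piece types. Both figures count many illegal positions, so they are ceilings rather than estimates of real game complexity; `order_of_magnitude` is an illustrative helper name.

```python
def order_of_magnitude(n):
    """Largest k such that 10**k <= n, for a positive integer n."""
    return len(str(n)) - 1


GO_POINTS = 19 * 19        # intersections on a standard Go board
CHESS_SQUARES = 64

# Crude ceilings: every point or square filled independently of the rules.
go_upper = 3 ** GO_POINTS             # empty, black, or white
chess_upper = 13 ** CHESS_SQUARES     # empty, or one of 12 piece types

if __name__ == "__main__":
    go_k = order_of_magnitude(go_upper)
    chess_k = order_of_magnitude(chess_upper)
    print(f"Go ceiling:    about 10^{go_k}")
    print(f"Chess ceiling: about 10^{chess_k}")
    print(f"Gap:           about {go_k - chess_k} orders of magnitude")
```

Even on these generous ceilings, the gap between the two games is around a hundred orders of magnitude, which is why examining every option was never a realistic route to strong Go play.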

Moreover, unlike traditional computers, people are able to think in non-linear abstraction. Humans can, for example, imagine a future state during the late stages of the game beyond what a computer could possibly calculate. We are not constrained by a forward-looking linear progression. Humans can wonderfully imagine a future endpoint, and work backwards from there to formulate a plan.

Many previously believed that this combination of factors (near-infinite combinations and the human ability to think abstractly) meant that Go would forever remain beyond the reach of the computer.

Then in 2016 something unprecedented happened. The AI system AlphaGo defeated world champion Go player Lee Sedol 4-1.

But that was nothing: the following year, a new AI system, AlphaGo Zero (the Go-specific forerunner of AlphaZero), was pitted against AlphaGo.

Unlike its predecessor, which learned from large databases of human Go games, all AlphaGo Zero knew was the rules, from which it played itself continuously over forty days.

Within the first three days of this self-learning, AlphaGo Zero annihilated the version of AlphaGo that had beaten Lee Sedol, not 4-1, but 100-0.

In forty days AlphaGo Zero had superseded 2,500 years of total human accumulated knowledge and even invented a range of strategies that had never been discovered before in history.

Meanwhile, chess engines are now a whole new frontier of competition, with programmers pitting their systems against one another to win digital titles. At the time of writing, the world's best chess engine is a program known as Stockfish, able to smash any human Grandmaster easily. In December 2017 Stockfish was pitted against AlphaZero.

Again, AlphaZero only knew the rules. AlphaZero taught itself to play chess over a period of nine hours. The result over 100 games? AlphaZero twenty-eight wins, zero losses, seventy-two draws.

Not only can artificial intelligence crush human players, it also obliterates the best computer programs that humans can design.
